Rapport Advantage Assessment Validation Study Results

DISC Model of Human Behavior

DISC Assessment Validation Study Summary

(calculations performed by Thomas G. Snider-Lotz, Ph.D., Psychometric Statistician)

A validation study was performed on the Personality Insights style assessment instrument to determine its psychometric characteristics. The instrument was administered to 500 people to provide an adequate statistical sample.

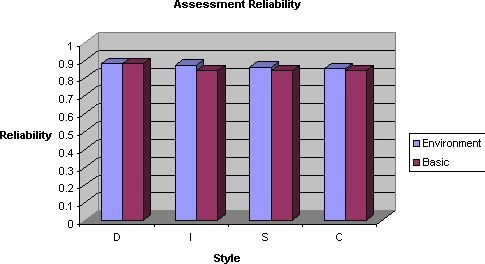

Reliability Data

Reliability is a measure of how consistent the results are. When a person takes a reliable assessment more than once, the results are the same or very close each time. An unreliable instrument would yield results that are widely scattered. If the instrument is reliable, we can be confident that a person's observed score is close to his or her "true score."

Reliability is measured on a scale of 0 to 1, where 0 represents completely unreliable and 1 represents completely reliable. For this analysis, a reliability score in the upper .70s is considered good. As the accompanying graphs show, reliability results ranged from 0.84 to 0.88, indicating a reliable assessment.
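The study does not state which reliability coefficient was computed. As a minimal sketch, assuming an internal-consistency coefficient such as Cronbach's alpha, a value on the 0 to 1 scale could be obtained as follows. The function name and the response data are hypothetical and are not taken from the study.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores.

    Assumption: every respondent answered the same items in the same order.
    """
    k = len(responses[0])  # number of items
    # Variance of each item across all respondents.
    item_variances = [pvariance([row[i] for row in responses]) for i in range(k)]
    # Variance of each respondent's total score.
    total_variance = pvariance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: three respondents whose item scores move together perfectly.
consistent = [[1, 1, 1], [2, 2, 2], [3, 3, 3]]
print(round(cronbach_alpha(consistent), 2))  # 1.0
```

Items that rise and fall together across respondents push the coefficient toward 1, while items that vary independently push it toward 0.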

Validity Data

Validity is a measure of whether an instrument is appropriate for the use to which it is put. The purpose of the Discovery Report assessment is to classify people in terms of "DISC" behavioral patterns. The type of validity examined in this kind of study is called "construct validity." One measure of construct validity is input from experts in the field of "DISC" behavioral styles. Another is the degree to which the assessment results agree with outcomes generated by other widely accepted instruments.

In this study, 1) the Discovery Report assessment instrument was formulated by experts with many years of experience with the DISC model of human behavior, and 2) the Discovery Report assessment instrument was shown to have a relatively high correlation with a widely accepted instrument. A high correlation indicates high construct validity. Please refer to the accompanying graph to view the validity results.
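Agreement between two instruments is commonly summarized with a Pearson correlation coefficient. The sketch below assumes that each person has a score in the same category on both instruments. The function name and the scores are hypothetical, and the study does not specify which correlation statistic was used.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    # Sum of cross-products of deviations from the means.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Root sums of squared deviations for each instrument.
    dev_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    dev_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cross / (dev_x * dev_y)


# Hypothetical scores for four people on two instruments that agree perfectly.
instrument_a = [4, 9, 15, 20]
instrument_b = [8, 18, 30, 40]
print(round(pearson_r(instrument_a, instrument_b), 2))  # 1.0
```

A value near 1 means people who score high on one instrument also score high on the other. A value near 0 means the two sets of scores are unrelated.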

Standard Errors of Measure

Standard errors of measure (SEM) indicate the consistency of an instrument in terms of its own point scale. Assessment results are plotted on a scale from 0 to 24. The SEM of the Discovery Report assessment tool ranged from 1.6 to 2.0, indicating that a person who took the assessment over and over would typically get results in any one category that stay within about 2 points of his or her true score. This is a further indication of the instrument's consistency.
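In classical test theory, the SEM is usually derived from the reliability as SEM = SD × sqrt(1 − reliability), where SD is the standard deviation of observed scores. The sketch below assumes that relationship. The study does not report the score SD, so the value of 5.0 used here is purely illustrative, and the function names are hypothetical.

```python
from math import sqrt


def standard_error_of_measure(score_sd, reliability):
    """Classical test theory: SEM = SD * sqrt(1 - reliability)."""
    return score_sd * sqrt(1.0 - reliability)


def score_band(observed, sem, z=1.0):
    """Range of plus or minus z SEMs around an observed score."""
    return (observed - z * sem, observed + z * sem)


# Illustrative assumption: an SD of 5.0 points on the 0 to 24 scale.
sem = standard_error_of_measure(score_sd=5.0, reliability=0.84)
print(round(sem, 2))         # 2.0
print(score_band(12, 2.0))   # (10.0, 14.0)
```

Under these assumptions, an observed score of 12 with an SEM of 2.0 gives a band of 10 to 14. With normally distributed measurement error, roughly two-thirds of repeated results would be expected to fall inside a band of one SEM on either side.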

Actual User Feedback

After many thousands of assessments given online and at live seminars, we can report that users find the reports useful for the intended purpose (personal growth and people-skill awareness). Such empirical feedback is a further consideration in judging the validity of the assessment tool. Well over 90% of the participants offer very positive feedback. Fewer than one-tenth of one percent of participants have disagreed with the assessment results. We are consistently told that our "Discovery Reports" are helpful and accurate.